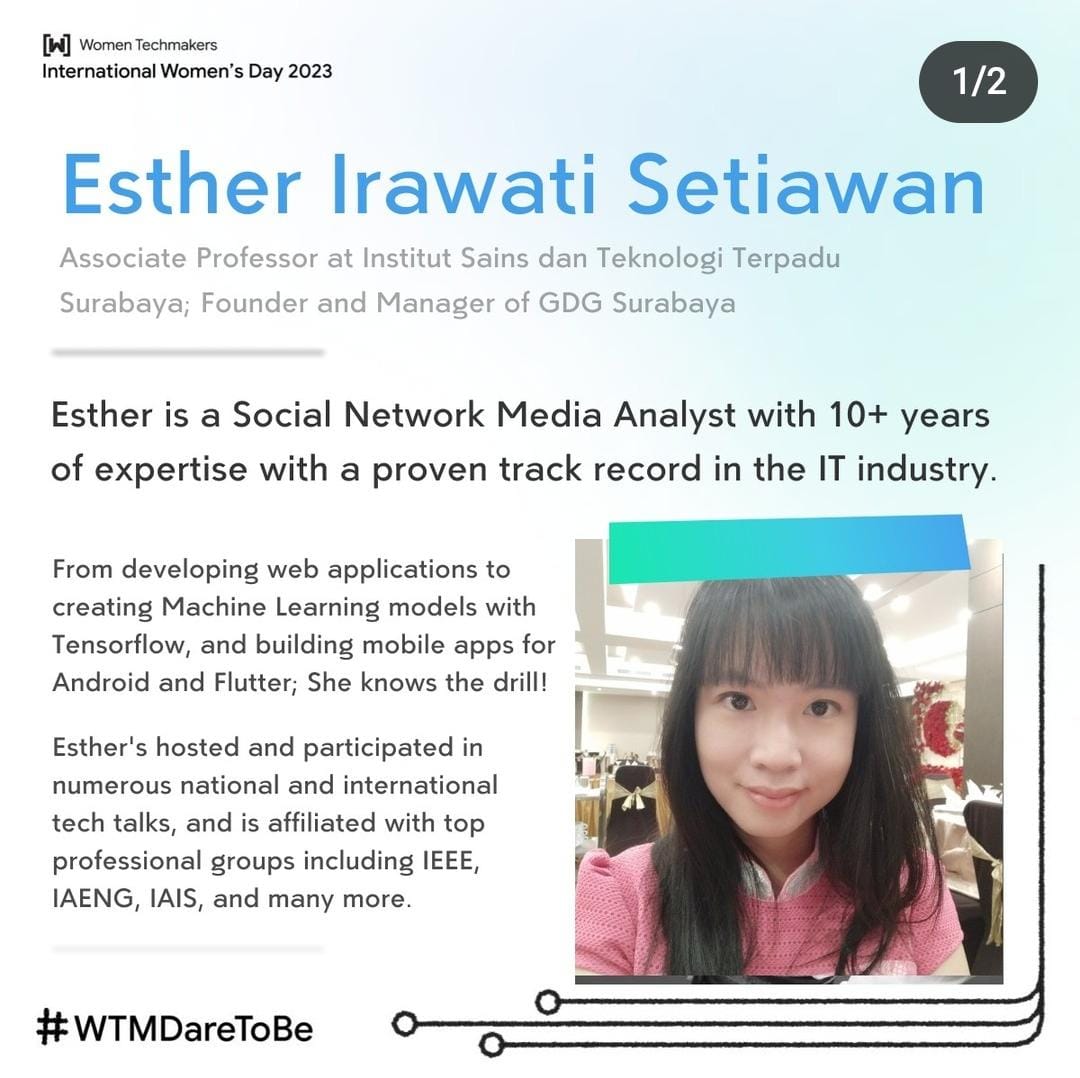

On Saturday, March 11, 2023, Women Techmakers Bali held a seminar at Balai Diklat Industri Denpasar to celebrate International Women's Day. One of the speakers was Esther Irawati Setiawan, Associate Professor at Institut Sains dan Teknologi Terpadu Surabaya and the founder and manager of GDG Surabaya. She gave a talk titled "Transformers is All We Need: A Foundation of Large Language Models".

In her talk, Esther covered four topics:

- Introduction to Transformers: an overview of transformer-based models, their architecture, and how they differ from other types of models, including a discussion of self-attention and how it enables a model to process input sequences of varying lengths.
- Building a Transformer: how participants can build a transformer model from scratch using a deep learning framework such as TensorFlow. This involves understanding the layers of the transformer architecture, such as the multi-head attention and feed-forward layers, and how to implement them in code.
- Pretraining a Language Model: with a basic understanding of transformers in place, the concept of pretraining and the role of large-scale language modeling tasks in advancing the field of natural language processing.
- Fine-tuning a Language Model: once a model has been pretrained, it can be fine-tuned for specific downstream tasks such as text classification, sentiment analysis, or question answering, and its performance on that task can then be evaluated.

Esther concluded by demonstrating techniques that use Large Language Models.
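The self-attention idea from the first part of the talk can be illustrated with a short sketch. This is not code from the talk. It is a minimal single-head scaled dot-product attention in plain Python, and the projection matrices are hypothetical toy values. A real model, for example one built in TensorFlow, would learn these weights and use several heads. The sketch shows why input length can vary: the same d_model x d_k weights are applied to every token, whatever the number of tokens n is.

```python
import math


def softmax(xs):
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    # a is n x k, b is k x m; returns the n x m product.
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def transpose(m):
    return [list(row) for row in zip(*m)]


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention (illustrative sketch).

    x: list of n token vectors of size d_model; n can be any length.
    w_q, w_k, w_v: d_model x d_k projection matrices (hypothetical toy values).
    Returns (outputs, weights): n vectors of size d_k and the n x n attention weights.
    """
    q = matmul(x, w_q)
    k = matmul(x, w_k)
    v = matmul(x, w_v)
    d_k = len(w_q[0])
    # scores[i][j] measures how strongly token i attends to token j.
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    # Each output is a weighted average of the value vectors.
    return matmul(weights, v), weights


# Toy 2-dimensional embeddings; identity projections keep the example readable.
identity = [[1.0, 0.0], [0.0, 1.0]]
short_seq = [[1.0, 0.0], [0.0, 1.0]]
long_seq = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]
for seq in (short_seq, long_seq):
    out, weights = self_attention(seq, identity, identity, identity)
    # One output per input token; each row of attention weights sums to 1.
    print(len(out), [round(sum(row), 6) for row in weights])
```

Because attention weights are computed between every pair of tokens, the same function handles the two-token and four-token sequences without any change to the weights. Multi-head attention repeats this computation with several independent sets of projections and concatenates the results before passing them to the feed-forward layer.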